SoftBank developing AI agents to make each worker like a ‘thousand-armed deity’

SoftBank boss Son Masayoshi said on Wednesday that his team is working on a first-of-its-kind system in which AI agents will be able to self-replicate

An AI expert has accused OpenAI of rewriting its history and being overly dismissive of safety concerns.

Former OpenAI policy researcher Miles Brundage criticized the company’s recent safety and alignment document published this week. The document describes OpenAI as striving for artificial general intelligence (AGI) in many small steps, rather than making “one giant leap,” saying that the process of iterative deployment will allow it to catch safety issues and examine the potential for misuse of AI at each stage.

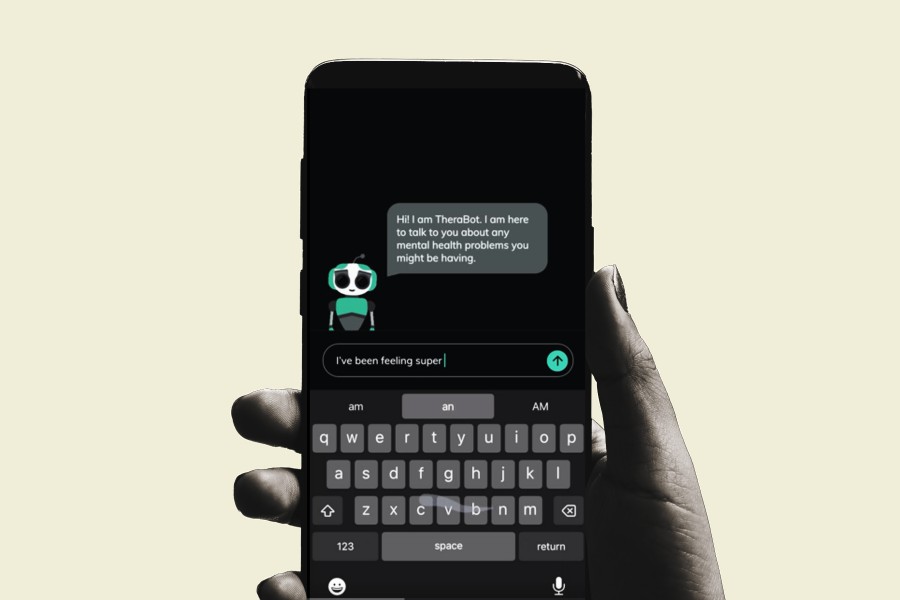

Among the many criticisms of AI technology like ChatGPT, experts are concerned that chatbots will give inaccurate information regarding health and safety (like the infamous issue with Google’s AI search feature which instructed people to eat rocks) and that they could be used for political manipulation, misinformation, and scams. OpenAI in particular has attracted criticism for lack of transparency in how it develops its AI models, which can contain sensitive personal data.

The release of the OpenAI document this week seems to be a response to these concerns. The document implies that the development of the previous GPT-2 model was “discontinuous” and that the model was not initially released due to “concerns about malicious applications,” but says the company will now move toward a principle of iterative development instead. Brundage, however, contends that the document alters the narrative and does not accurately depict the history of AI development at OpenAI.

“OpenAI’s release of GPT-2, which I was involved in, was 100% consistent + foreshadowed OpenAI’s current philosophy of iterative deployment,” Brundage wrote on X. “The model was released incrementally, with lessons shared at each step. Many security experts at the time thanked us for this caution.”

Brundage also criticized the company’s apparent approach to risk based on this document, writing: “It feels as if there is a burden of proof being set up in this section where concerns are alarmist + you need overwhelming evidence of imminent dangers to act on them – otherwise, just keep shipping. That is a very dangerous mentality for advanced AI systems.”

This comes at a time when OpenAI is under increasing scrutiny with accusations that it prioritizes “shiny products” over safety.


articiOver the past few years, AI has gone from limited chatbots to suddenly dominating the news cycle every single day. There are a range of AI chatb

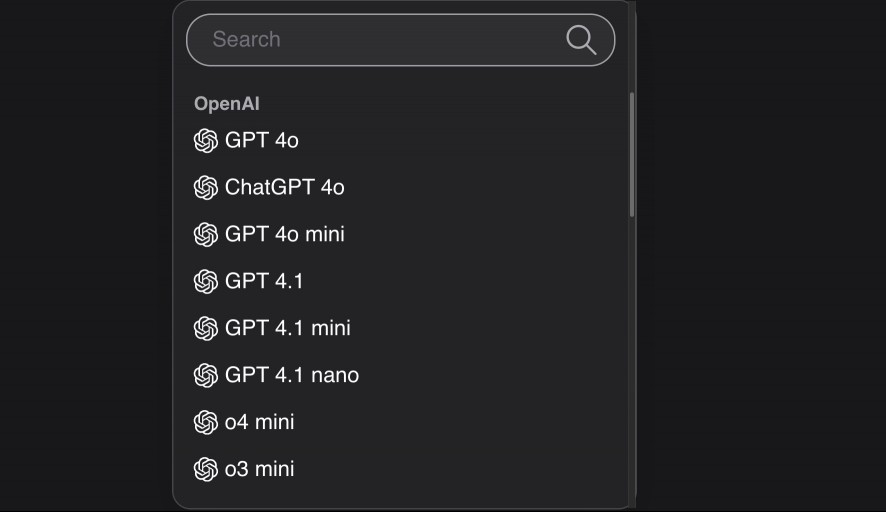

OpenAI has made GPT-4.1 more widely available, as ChatGPT Plus, Pro, and Team users can now access the AI model. On Wednesday, the brand announced tha

AI is being heavily pushed into the field of research and medical science. From drug discovery to diagnosing diseases, the results have been fairly en

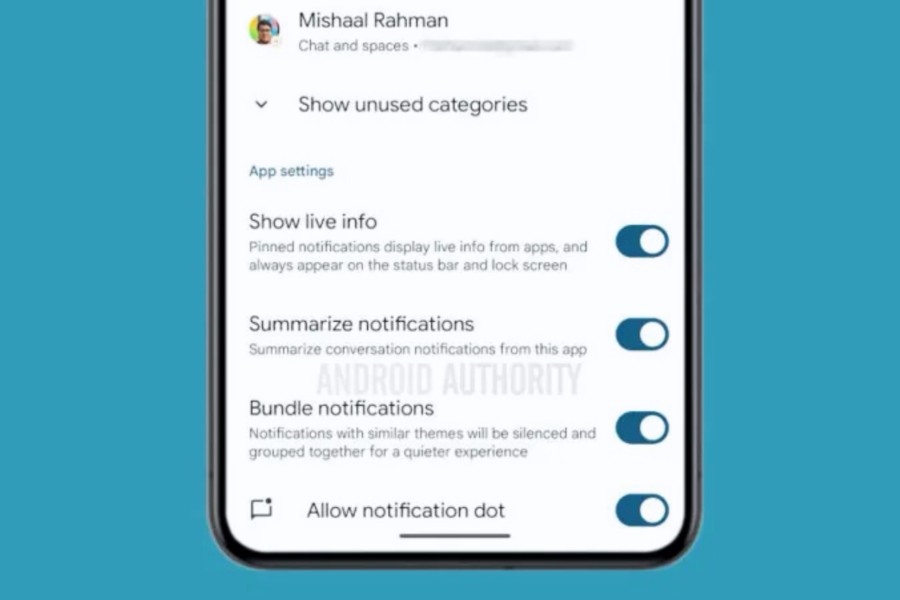

So far, Google has done an admirable job of putting generative AI tools on Android smartphones. Earlier today, it announced further refinements to how

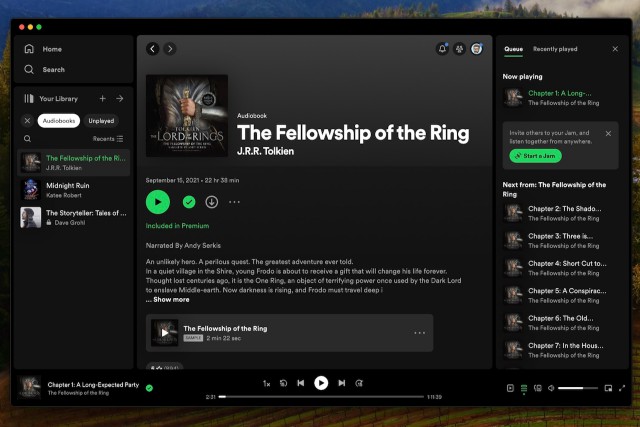

Derek Malcolm / Digital TrendsIn a move expected to dramatically increase the quantity of available audiobooks, Spotify announced on Thursday that wil

Mobile World Congress Read our complete coverage of Mobile World Congress The year 2025 has seen nearly every smartphone brand tout the virtues of art

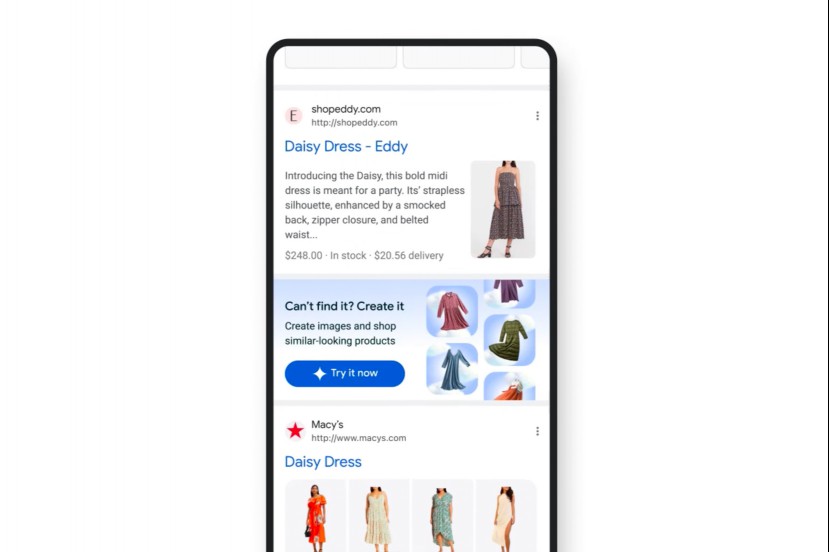

AI has been a part of the Google Shopping experience for a while now. In October last year, Google started showing an AI-generated brief with suggesti
